Better account for peaks / max resource usage in monitoring

Created by: slimsag

Resource usage on a service can spike and drop again within a very short timeframe. We would like to surface such spikes, because they may mean you should not, for example, reduce the memory or CPU available to a container.

Fundamental limitations in how cadvisor and Prometheus work prevent this from being completely accurate, but we can improve accuracy to a point that I believe will generally catch such instances:

- Use

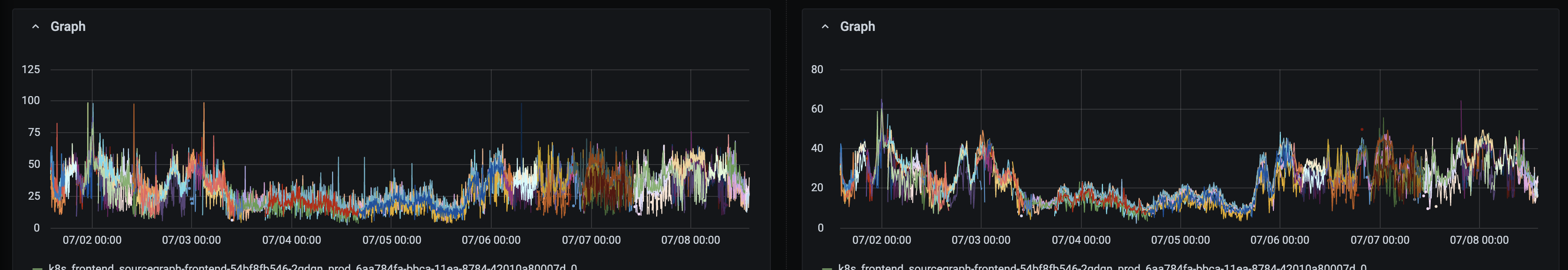

max_over_timeinstead ofavg_over_timein our memory queries and CPU queries which results in picking up spikes more commonly (but not always), example comparison (left is max, right is avg):

- Use an aggressive 100ms step in just the container CPU/memory dashboard panels (e.g. like this). This will not help with alerts, but it would help admins viewing the dashboard, because it prevents us from skipping data points (at the cost of loading many more data points, obviously). In some cases this makes no difference, while in others it does. Example below (left is 100ms, right is auto):

Important note: You may be tempted to think we can use `container_memory_max_usage_bytes`, but we cannot: it accounts for inactive memory (e.g. non-physical mmap memory) and does not match up with the Linux OOM killer, whereas `container_memory_working_set_bytes`, which we use today, does (and cadvisor provides no max variant of it).
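
To illustrate the two dashboard suggestions above (switching to `max_over_time` and using a finer step), here is a minimal Python sketch. It does not talk to Prometheus. The sample values, the window size, and the helper names `over_time` and `at_step` are all made up for illustration, and the windowing only roughly approximates how a PromQL range function behaves.

```python
from statistics import mean

# Hypothetical memory samples (MiB), one per scrape, with one brief spike.
samples = [100, 102, 101, 99, 100, 103, 101, 900, 102, 100, 101, 99]


def over_time(values, window, agg):
    """Apply `agg` to each trailing window of `window` samples.

    Rough stand-in for a PromQL *_over_time range function.
    """
    return [
        agg(values[max(0, i - window + 1): i + 1])
        for i in range(len(values))
    ]


def at_step(values, step):
    """Keep only every `step`-th point, like a coarse dashboard step."""
    return values[::step]


avg_series = over_time(samples, window=5, agg=mean)
max_series = over_time(samples, window=5, agg=max)

# Averaging flattens the spike; taking the max preserves it.
print("peak of avg windows:", max(avg_series))
print("peak of max windows:", max(max_series))  # 900

# A coarse step on the raw series skips right over the spike.
print("raw series, coarse step:", at_step(samples, 5))  # [100, 103, 101]

# With a window at least as long as the step, max still catches it.
print("max windows, coarse step:", at_step(max_series, 5))

# With a window shorter than the step, the spike can be missed again.
print("short max windows, coarse step:", at_step(over_time(samples, 2, max), 5))
```

In this toy data the last line misses the spike, which matches the "but not always" caveat: a max window only helps when it actually covers the samples between two evaluated points, and a finer step reduces how many samples fall into that gap.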